To accelerate with DevOps, challenges must be identified and removed. In this, the second of two blogs exploring the value you can achieve with DevOps solutions, Strategic Account Consultant Harrison Kirby takes us through common constraints you might come across.

As we covered in my first blog, the only way to move faster with DevOps is by identifying and removing constraints. But to accelerate even further, you need to look beyond reacting to existing constraints and pre-empt the ones that might be lurking around the corner. Just what might those constraints look like?

How can you accelerate with DevOps?

Let’s assume we own an enterprise social media company with a unique product offering. It’s imperative that we can release new features and fix bugs faster than our competition. It’s obvious to the business that we are not performing: changes take weeks to reach production, and value is not reaching end users in a timely fashion. We need to identify our constraints and remedy them as soon as possible. Here’s how DevOps can help in this scenario.

Let’s pick up our value stream mapping technique from my first blog. To recap, we found that the failure rate for deployments into production is 30% and that the deployment process time (the time it takes to deploy the product) is five hours. We know we need two things to be successful with deployment: reliability and efficiency. Needing to deploy five times a day when each deployment takes five hours is neither reliable nor efficient, so how can we improve that?
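To see how these two figures compound, here is a small worked sketch in Python. It rests on a simplifying assumption that is not part of the scenario: each attempt fails independently at the same 30% rate, and a failed attempt still costs the full five hours before it is retried. Under that model, the number of attempts per successful deployment follows a geometric distribution with mean 1 / (1 - failure rate).

```python
def expected_attempts(failure_rate: float) -> float:
    """Mean number of attempts until the first success (geometric distribution)."""
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure_rate must be in the range [0, 1)")
    return 1.0 / (1.0 - failure_rate)


def expected_hours_per_success(failure_rate: float, hours_per_attempt: float) -> float:
    """Expected hours to land one successful deployment, assuming every attempt,
    failed or not, takes the full deployment process time."""
    return expected_attempts(failure_rate) * hours_per_attempt


# Scenario figures: 30% failure rate, five-hour deployment process time.
print(f"{expected_hours_per_success(0.30, 5.0):.2f} hours per successful deployment")
```

With these figures, each successful release costs roughly 7.1 hours on average, so improving either reliability (the failure rate) or efficiency (the process time) shortens the cycle.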

There are many constraints across the spectrum of people, process and technology that could cause high deployment failure rates and slow deployments, and many symptoms that may surface as a result.

In this instance, there are many likely causes, but let’s assume for the purposes of this scenario that our code quality is very high and that the output from the test environment is very positive. That leaves two likely culprits: a discrepancy between the test and production environments, and the deployment pipeline itself.

Upon running an assessment, it becomes obvious that there are a number of differences in both configuration and infrastructure between test and production (for example, test is not load-balanced, but production is) that are likely to be contributing to the issue. This is known as environment drift. Looking further back, it appears both environments were created manually. So how do we increase our reliability and efficiency using a DevOps approach?
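Part of such an assessment can be automated. The Python sketch below compares two flat configuration snapshots and reports every setting that differs; the keys and values are hypothetical, and in practice the snapshots would be exported from each environment by whatever inventory tooling you already use.

```python
from typing import Any, Dict, List


def find_drift(test: Dict[str, Any], prod: Dict[str, Any]) -> List[str]:
    """Describe every setting that differs between two flat configuration maps."""
    differences = []
    for key in sorted(test.keys() | prod.keys()):
        if key not in prod:
            differences.append(f"{key}: only in test ({test[key]!r})")
        elif key not in test:
            differences.append(f"{key}: only in production ({prod[key]!r})")
        elif test[key] != prod[key]:
            differences.append(f"{key}: test={test[key]!r}, production={prod[key]!r}")
    return differences


# Hypothetical snapshots mirroring the drift found in the assessment.
test_env = {"load_balanced": False, "instance_count": 1, "runtime": "python3.11"}
prod_env = {"load_balanced": True, "instance_count": 3, "runtime": "python3.11"}

for line in find_drift(test_env, prod_env):
    print(line)
# instance_count: test=1, production=3
# load_balanced: test=False, production=True
```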

Addressing reliability with DevOps

To increase reliability, we need to prevent environment drift, and to do this we need a consistent and reliable approach to provisioning and configuring infrastructure, which is what Infrastructure as Code (IaC) provides. With IaC, we provision infrastructure through code rather than by hand. The components of IaC are:

- Coding: the process of writing machine-readable definition files.

- Version control: the process of storing and maintaining coded artefacts.

- Deployment: the process of applying definition files to a target infrastructure without manual intervention.

- Tooling:

  - Imperative and declarative tools (such as Bicep, Terraform, PowerShell, Ansible and ARM templates) to support the coding and deployment of definition files.

  - Version control systems (Azure Repos, Git) to support the version control component.

  - Pipeline tools (Azure DevOps, Jenkins) to apply changes in an automated, fully repeatable fashion.

Because code is stored as a versioned state in version control, in principle we can use our deployment pipeline to apply the same environment state wherever we like and get the same result. This mitigates environment drift and, in turn, reduces the deployment failure rate, improving reliability.

Declarative tooling such as Terraform helps us further by managing dependencies and differences automatically, ensuring consistency across the environment state. We simply declare what we want and let the tool figure out what needs to happen.
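Terraform’s engine is far more sophisticated (among other things, it orders changes using a dependency graph), but the core declarative idea can be sketched in a few lines of Python: compare the desired state with the actual state and derive create, update and delete actions. The resource names and properties below are hypothetical, and the "infrastructure" is just an in-memory dictionary.

```python
from typing import Dict, List, Tuple

# Hypothetical model: resource name -> declared properties.
State = Dict[str, Dict[str, object]]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Derive the actions that would make `actual` match `desired`."""
    actions = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


def apply(desired: State, actual: State) -> State:
    """Execute the plan against an in-memory stand-in for real infrastructure."""
    result = dict(actual)
    for action, name in plan(desired, actual):
        if action == "delete":
            del result[name]
        else:  # create and update both converge on the declared properties
            result[name] = dict(desired[name])
    return result


desired = {"web": {"size": "medium", "count": 3}, "lb": {"sku": "standard"}}
test_env = {"web": {"size": "small", "count": 1}}

print(plan(desired, test_env))  # [('create', 'lb'), ('update', 'web')]
test_env = apply(desired, test_env)
print(plan(desired, test_env))  # [] because applying again changes nothing
```

Applying the same definition to both test and production converges them on identical state, which is the property that keeps drift at bay.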

In our scenario, this would mean that test and production mirror one another in terms of infrastructure components and configuration, so that functionality that works in test behaves the same way in production. While this is easier on a greenfield project, reverse engineering tooling (such as Terraform’s import capability) makes it highly achievable on brownfield projects like ours too.

In addition, reverse engineering capability provides a new opportunity to increase flow between the prototyping and delivery phases. For instance, the Government Digital Service (GDS) splits agile delivery into five phases: discovery, alpha, beta, live and retirement. The alpha phase is a time-boxed phase in which an organisation trials different solutions to a problem and aims to choose one to take forward.

Because alpha relies on rapid trial and error, infrastructure components are created in the quickest way possible (via a portal), with minimal effort put into reusability. Therefore, if a solution chosen in alpha moves on to beta, the default approach is to start a productionised, codified build from scratch. However, reverse engineering tooling makes it possible to reuse the portal-created alpha build as the basis for the productionised build, saving a significant amount of effort and, importantly, reducing the time it takes to develop and release the product to end users.
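Real tools perform this step against live cloud APIs (Terraform’s import feature, for example). The Python sketch below shows only the core idea, under assumptions of our own: the portal-built resources have already been exported as a list of dictionaries, and fields such as `id` and `provisioning_state` (hypothetical names) are runtime details that should not be hard-coded into a definition.

```python
import json
from typing import Any, Dict, List

# Hypothetical read-only fields returned by a cloud API that do not belong in code.
READ_ONLY_FIELDS = {"id", "provisioning_state", "created_at"}


def to_definition(exported: List[Dict[str, Any]]) -> Dict[str, Dict[str, Any]]:
    """Convert exported portal-built resources into a definition keyed by resource name."""
    definition = {}
    for resource in exported:
        definition[resource["name"]] = {
            key: value
            for key, value in resource.items()
            if key != "name" and key not in READ_ONLY_FIELDS
        }
    return definition


alpha_export = [
    {"name": "web", "type": "app_service", "sku": "B1",
     "id": "/example/web", "provisioning_state": "Succeeded"},
    {"name": "db", "type": "sql_database", "sku": "S0",
     "id": "/example/db", "created_at": "2023-01-01"},
]

# Committing this output to version control turns the alpha build into code.
print(json.dumps(to_definition(alpha_export), indent=2, sort_keys=True))
```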

Addressing efficiency with DevOps

Reducing the probability of environment drift will naturally help efficiency, but we can also look at our build and release pipelines. Automated build and release pipelines are becoming the norm, but they can be optimised further to reduce wait and process times.

The build pipeline is responsible for building and compiling application code and the release pipeline is responsible for deployment of the built artefacts. By analysing both pipelines using value stream mapping we can identify where the bottlenecks lie.
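Once each stage’s process time and wait time have been recorded, the analysis is simple arithmetic. The Python sketch below computes lead time, flow efficiency (the share of lead time spent on actual work) and the stage that contributes most to lead time. The figures are illustrative only; apart from the five-hour (300-minute) production deployment, none of them come from the scenario.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Stage:
    name: str
    process_minutes: float  # time spent actively working on the change
    wait_minutes: float     # time the change sits idle before this stage starts


def summarise(stages: List[Stage]) -> Tuple[float, float, str]:
    """Return (lead time, flow efficiency, stage contributing most to lead time)."""
    process = sum(s.process_minutes for s in stages)
    lead_time = process + sum(s.wait_minutes for s in stages)
    slowest = max(stages, key=lambda s: s.process_minutes + s.wait_minutes)
    return lead_time, process / lead_time, slowest.name


# Illustrative figures only; replace them with the timings from your own map.
release = [
    Stage("build", process_minutes=20, wait_minutes=10),
    Stage("deploy to test", process_minutes=60, wait_minutes=120),
    Stage("manual approval", process_minutes=5, wait_minutes=480),
    Stage("deploy to production", process_minutes=300, wait_minutes=60),
]
lead_time, efficiency, slowest = summarise(release)
print(f"Lead time {lead_time:.0f} min, flow efficiency {efficiency:.0%}, biggest contributor: {slowest}")
```

With these made-up numbers, the manual approval stage dominates lead time even though almost no work happens in it, which is exactly the kind of bottleneck the map is meant to expose.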

For instance, our deployment may depend on manual steps, which are subject to human error and could be contributing to the failure rate. We may also be rebuilding every time a new deployment is executed instead of using an artefact repository to store pre-built code. By fully automating the process and reusing what has already been built, we can reduce wait and process times dramatically.

We can also evaluate options such as running stages in parallel, adding more build agents and caching to reduce duration. Going further, we can evaluate whether moving from a monolithic architecture to a more loosely coupled one would improve our ability to build and release rapidly.
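Why parallel running and extra agents help can be shown with a critical-path calculation. In this Python sketch, the pipeline stages, their durations and their dependencies are hypothetical; it assumes the dependency graph has no cycles and that enough agents are available to run every independent stage at the same time.

```python
from typing import Dict, List, Tuple

# Hypothetical pipeline: stage -> (duration in minutes, stages it depends on).
PIPELINE: Dict[str, Tuple[int, List[str]]] = {
    "compile": (10, []),
    "unit_tests": (15, ["compile"]),
    "static_analysis": (12, ["compile"]),
    "package": (5, ["unit_tests", "static_analysis"]),
}


def sequential_minutes(pipeline: Dict[str, Tuple[int, List[str]]]) -> int:
    """Duration when every stage runs one after another on a single agent."""
    return sum(duration for duration, _ in pipeline.values())


def parallel_minutes(pipeline: Dict[str, Tuple[int, List[str]]]) -> int:
    """Duration of the critical path when independent stages run on separate agents."""
    finish: Dict[str, int] = {}

    def finish_time(stage: str) -> int:
        if stage not in finish:
            duration, deps = pipeline[stage]
            finish[stage] = duration + max((finish_time(d) for d in deps), default=0)
        return finish[stage]

    return max(finish_time(stage) for stage in pipeline)


print(sequential_minutes(PIPELINE), "min sequential")  # 42 min sequential
print(parallel_minutes(PIPELINE), "min in parallel")   # 30 min in parallel
```

The saving comes entirely from running the two test stages side by side; the critical path (compile, unit tests, package) sets a floor that only faster stages, caching or a different architecture can lower.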

Your DevOps solutions: additional and alternative techniques

As with most things, there is no silver bullet for DevOps challenges, because every context differs in its environments, organisation, culture, process and technology, which together form a constellation of complexity. Hence my open definition: “DevOps is whatever you need to do to deliver value reliably and efficiently.”

The trick is to treat DevOps as a toolkit of solutions and pick the right tools for your job. Here are two more examples of DevOps techniques that could help in the scenario above.

Containers and container orchestration

Containers are isolated, lightweight, standalone, executable packages of software that include everything needed to run an application: code, runtime, system tools, system libraries and settings. By their very nature, they are highly portable between environments, which goes a long way to mitigating environment drift. The same container image built on a local machine can be deployed along the path to live into production, ensuring parity.
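One practical way to keep that parity honest is to promote images by digest rather than by tag: a tag such as `latest` can be moved to point at a different image, whereas a `sha256` digest identifies the exact image content. The Python check below is a sketch; the registry and image names are hypothetical, and in practice the references would be read from your deployment manifests.

```python
import re
from typing import Dict

# An image reference pinned by digest ends in @sha256: followed by 64 hex characters.
DIGEST_REF = re.compile(r"@sha256:[0-9a-f]{64}$")


def has_parity(deployed: Dict[str, str]) -> bool:
    """True when every environment runs the same digest-pinned image."""
    for environment, image in deployed.items():
        if not DIGEST_REF.search(image):
            raise ValueError(f"{environment} uses a mutable reference: {image}")
    return len(set(deployed.values())) == 1


image = "registry.example.com/social-feed@sha256:" + "3f" * 32  # hypothetical image
print(has_parity({"dev": image, "test": image, "production": image}))  # True
```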

While working with a few dozen containerised apps is relatively straightforward, operations can quickly become complicated as volumes grow to enterprise scale. This is where container orchestration systems can simplify container management while improving security posture and resilience. The most common is Kubernetes, an open-source system that automates the deployment, scaling and management of containerised applications.

Automated testing

How often does a deployment fail because something, somewhere, has changed and not been tested? Through testing, we must validate that the desired functionality has been achieved while ensuring that previously working functionality, system performance and security posture have not been degraded.

By taking an automated approach, we can vastly reduce test process time and the failure rate. Types of automated testing include unit tests, static code analysis, dynamic code analysis, functional testing, synthetic testing, performance testing and security testing. We can build these into our pipelines to run automatically and provide full-spectrum assurance.
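As a minimal illustration of the unit-test layer, here is a Python `unittest` sketch. `truncate_preview` is a hypothetical feature of our social media product; the tests pin down its current behaviour, so a later change that breaks it fails in the pipeline rather than in production.

```python
import unittest


def truncate_preview(text: str, limit: int = 20) -> str:
    """Hypothetical feature code: shorten a post for display in a feed preview."""
    if len(text) <= limit:
        return text
    return text[: limit - 3].rstrip() + "..."


class TruncatePreviewTests(unittest.TestCase):
    def test_short_post_is_unchanged(self) -> None:
        self.assertEqual(truncate_preview("Hello world"), "Hello world")

    def test_long_post_fits_the_limit(self) -> None:
        preview = truncate_preview("A" * 50)
        self.assertLessEqual(len(preview), 20)
        self.assertTrue(preview.endswith("..."))
```

Saved as `test_preview.py`, the suite runs locally or in a pipeline step with `python -m unittest test_preview`; a failing test produces a non-zero exit code, which fails the stage.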

The ‘just in time’ nature of automated testing within pipelines also provides rapid feedback on built and deployed code, allowing issues to be resolved quickly while the change is new and still fresh in the developer’s memory.
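How quickly that feedback arrives depends partly on ordering. One common approach, sketched here in Python with hypothetical durations, is to run the cheapest checks first and stop at the first failure, so a broken change is reported in minutes rather than after the whole suite. This sequential fail-fast runner is an illustration, not a specific pipeline tool’s behaviour; real pipelines often combine it with parallel stages.

```python
from typing import Callable, List, Optional, Tuple

# (check name, typical duration in minutes, function returning True on pass)
Check = Tuple[str, float, Callable[[], bool]]


def run_fail_fast(checks: List[Check]) -> Tuple[Optional[str], float]:
    """Run the quickest checks first and stop at the first failure.

    Returns the failing check's name (or None if all pass) and the minutes
    elapsed before that feedback arrived.
    """
    elapsed = 0.0
    for name, minutes, check in sorted(checks, key=lambda c: c[1]):
        elapsed += minutes
        if not check():
            return name, elapsed
    return None, elapsed


# Hypothetical durations; the static analysis check is made to fail.
checks: List[Check] = [
    ("performance tests", 40, lambda: True),
    ("unit tests", 2, lambda: True),
    ("static code analysis", 5, lambda: False),
    ("security tests", 25, lambda: True),
]
print(run_fail_fast(checks))  # ('static code analysis', 7.0)
```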

Value is your DevOps end goal

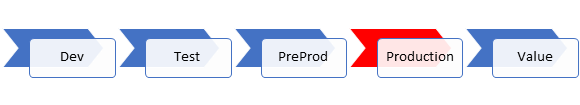

All in all, the application of these DevOps techniques can create value for end users by increasing reliability and efficiency, so that the latest feature can be rolled out quickly.

There isn’t a single right answer here, but what’s clear is that we need to strive towards excellence, challenge the status quo and do whatever is necessary to deliver value reliably and efficiently.
